StoRM Backend installation and configuration guide

Introduction

The StoRM Backend service is the core of the StoRM services. It executes all SRM functionalities and takes care of file and space metadata management. It also enforces authorization permissions on files and interacts with external Grid services.

Install the service package

Grab the latest package from the StoRM repository. See instructions here.

Install the metapackage:

yum install storm-backend-mp

Service configuration

The Backend needs to be configured for two main aspects:

- service information: this section contains all the parameters regarding the StoRM service details. It relies on the storm.properties configuration file.

- storage information: this section contains all the information regarding Storage Areas and other storage details. It relies on the namespace.xml file.

Both the storm.properties and namespace.xml configuration files are located in:

/etc/storm/backend-server

The storm.properties configuration file contains a list of key-value pairs that represent all the information needed to configure the StoRM Backend service. When the Backend starts, it writes into the log file the whole set of parameters read from the configuration file.

The namespace.xml configuration file contains the Storage Area information: what is needed to perform the mapping functionality, the Storage Area capabilities (i.e. the supported access and transfer protocols), etc.

Service information

Service Parameters

| Property Name | Description |
|---|---|
| storm.service.SURL.endpoint | Comma-separated list of strings identifying the StoRM Frontend endpoint(s). StoRM uses it to determine whether a SURL is local. E.g. srm://storm.cnaf.infn.it:8444/srm/managerv2. If you want to accept SURLs that use the IP address instead of the FQDN hostname, you have to add the corresponding endpoint (e.g. IPv4: srm://192.168.100.12:8444/srm/managerv2 or IPv6: srm://[2001:0db8::1428:57ab]:8444/srm/managerv2). Default value: srm://storm.service.FE-public.hostname:8444/srm/managerv2, where the hostname is the value of storm.service.FE-public.hostname |
| storm.service.port | SRM service port. Default: 8444 |
| storm.service.SURL.default-ports | Comma-separated list of valid SURL port numbers. Default: 8444 |
| storm.service.FE-public.hostname | StoRM Frontend hostname in case of a single-Frontend StoRM deployment; StoRM Frontends DNS alias in case of a multiple-Frontend StoRM deployment. |
| storm.service.FE-list.hostnames | Comma-separated list of Frontend hostname(s). Default: localhost |
| storm.service.FE-list.IPs | Comma-separated list of Frontend IP(s). E.g. 131.154.5.127, 131.154.5.128. Default: 127.0.0.1 |
| pinLifetime.default | Default PinLifetime in seconds used for pinning files in case of srmPrepareToPut or srmPrepareToGet operations without any pinLifetime specified. Default: 259200 |
| pinLifetime.maximum | Maximum PinLifetime allowed, in seconds. Default: 1814400 |
| fileLifetime.default | Default FileLifetime in seconds used for VOLATILE files in case of SRM requests without the FileLifetime parameter. Default: 3600 |
| extraslashes.gsiftp | Add extra slashes after the “authority” part of a TURL for the gsiftp protocol. |
| extraslashes.rfio | Add extra slashes after the “authority” part of a TURL for the rfio protocol. |
| extraslashes.root | Add extra slashes after the “authority” part of a TURL for the root protocol. |
| extraslashes.file | Add extra slashes after the “authority” part of a TURL for the file protocol. |
| synchcall.directoryManager.maxLsEntry | Maximum number of entries returned by an srmLs call. Since the results of a recursive srmLs can be in the order of millions, this prevents server overload. Default: 500 |
| directory.automatic-creation | Flag to enable automatic creation of missing directories upon srmPrepareToPut requests. Default: false |
| directory.writeperm | Flag to enable setting write permission on directories created upon srmMkDir requests. Default: false |
| default.overwrite | Default file overwrite mode to use upon srmPrepareToPut requests. Default: A. Possible values are: N, A, D. N stands for Never, A stands for Always and D stands for When files differ. |
| default.storagetype | Default File Storage Type to be used for srmPrepareToPut requests in case it is not provided in the request. Default: V. Possible values are: V, P, D. V stands for Volatile, P stands for Permanent and D stands for Durable. |

Garbage collector

The request garbage collector removes expired asynchronous SRM requests from the database. The value of expired.request.time defines how many seconds must elapse after a request's submission before it is considered expired. Appropriate tuning is needed when a high throughput of SRM requests is sustained for a long time.

| Property Name | Description |
|---|---|
| purging | Enable the request garbage collector. Default: true. |
| purge.interval | Time interval in seconds between successive purging runs. Default: 600. |
| purge.size | Number of requests picked up for cleaning by the request garbage collector at each run. This value is also used by the Tape Recall Garbage Collector. Default: 800 |
| purge.delay | Initial delay before starting the request garbage collection process, in seconds. Default: 10 |
| expired.request.time | Time in seconds after its submission for a request to be considered expired. Default: 604800 seconds (1 week). Since StoRM 1.11.13 it is also used to determine after how much time a completed recall task can be cleaned up. |
| expired.inprogress.time | Time in seconds after which an in-progress PtP request is considered expired. Default: 2592000 seconds (1 month) |
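To make the interplay of purge.delay, purge.interval, purge.size and expired.request.time concrete, here is a minimal Python sketch. It is not StoRM's implementation: the in-memory Request record, the oldest-first ordering and the helper names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    token: str
    submitted_at: int  # submission time, seconds since the epoch


def purge_run_times(delay: int, interval: int, runs: int) -> list[int]:
    """Offsets (seconds after Backend start) of the first `runs` purge runs."""
    return [delay + i * interval for i in range(runs)]


def select_for_purge(requests: list[Request], now: int,
                     expired_request_time: int = 604800,
                     purge_size: int = 800) -> list[Request]:
    """Pick at most purge_size requests submitted at least expired_request_time ago."""
    expired = [r for r in requests if now - r.submitted_at >= expired_request_time]
    expired.sort(key=lambda r: r.submitted_at)  # oldest first (an assumption)
    return expired[:purge_size]


print(purge_run_times(10, 600, 3))  # [10, 610, 1210]
```

Under the assumption that each run removes at most purge.size requests, the defaults cap cleaning at 800 requests every 600 seconds, i.e. 4800 per hour; a site that sustains more asynchronous requests than that will see the request tables grow, which is the tuning case mentioned above.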

Expired PUT requests Garbage Collector

This agent:

- transits ongoing srmPtP requests to SRM_FILE_LIFETIME_EXPIRED if the request pin lifetime has expired (see the pinLifetime.default variable in the Service Information section);

- transits to SRM_FAILURE the srmPtP requests that are still in SRM_REQUEST_INPROGRESS after expired.inprogress.time seconds.

The agent runs every transit.interval seconds and updates all the expired requests.

| Property Name | Description |
|---|---|
| transit.interval | Time interval in seconds between successive agent runs. Default: 3000. |
| transit.delay | Initial delay before starting the agent process, in seconds. Default: 60 |
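The two transitions performed by this agent can be sketched as a single function applied to each srmPtP request at every run. This is a minimal Python model, not StoRM code: treating an "ongoing" request as one in SRM_SPACE_AVAILABLE (the SRM v2.2 status of a granted put) is an assumption, and PtpRequest and transit are hypothetical names.

```python
from dataclasses import dataclass

# SRM v2.2 status codes. Treating "ongoing" as SRM_SPACE_AVAILABLE is an assumption.
SPACE_AVAILABLE = "SRM_SPACE_AVAILABLE"
IN_PROGRESS = "SRM_REQUEST_INPROGRESS"
LIFETIME_EXPIRED = "SRM_FILE_LIFETIME_EXPIRED"
FAILURE = "SRM_FAILURE"


@dataclass
class PtpRequest:
    status: str
    submitted_at: int    # seconds since the epoch
    pin_expires_at: int  # submitted_at + pin lifetime (pinLifetime.default if unspecified)


def transit(req: PtpRequest, now: int, expired_inprogress_time: int = 2592000) -> str:
    """Apply one agent pass to a single srmPtP request and return its new status."""
    if req.status == SPACE_AVAILABLE and now >= req.pin_expires_at:
        req.status = LIFETIME_EXPIRED
    elif req.status == IN_PROGRESS and now - req.submitted_at >= expired_inprogress_time:
        req.status = FAILURE
    return req.status


r = PtpRequest(SPACE_AVAILABLE, submitted_at=0, pin_expires_at=259200)
print(transit(r, now=300000))  # SRM_FILE_LIFETIME_EXPIRED
```

In this model a request is only re-evaluated when the agent runs, so a transition can lag the actual expiry by up to transit.interval seconds (3000 by default).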

Reserved Space Garbage Collector

| Property Name | Description |
|---|---|
| gc.pinnedfiles.cleaning.delay | Initial delay before starting the reserved space, JIT ACLs and pinned files garbage collection process, in seconds. Default: 10 |
| gc.pinnedfiles.cleaning.interval | Time interval in seconds between successive purging runs. Default: 300 |

Synchronous call

| Property Name | Description |
|---|---|
| synchcall.xmlrpc.unsecureServerPort | Port to listen on for incoming XML-RPC connections from Frontend(s). Default: 8080 |
| synchcall.xmlrpc.maxthread | Number of threads managing XML-RPC connections from Frontend(s). A well-sized value for this parameter should be at least equal to the sum of the number of worker threads in all Frontend(s). Default: 100 |
| synchcall.xmlrpc.max_queue_size | Maximum number of accepted and queued XML-RPC connections from Frontend(s). Default: 1000 |
| synchcall.xmlrpc.security.enabled | Whether the Backend requires a token to be present in order to accept XML-RPC requests. Default: true |
| synchcall.xmlrpc.security.token | The token that the Backend requires to be present in order to accept XML-RPC requests. Mandatory if synchcall.xmlrpc.security.enabled is true |
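The sizing rule for synchcall.xmlrpc.maxthread and the dependency between the two security properties can be checked mechanically before restarting the Backend. A minimal Python sketch, assuming storm.properties has already been loaded into a dict and that the worker thread count of each Frontend is known; check_xmlrpc_settings, the thread counts and the token value in the usage line are hypothetical.

```python
def check_xmlrpc_settings(props: dict[str, str], frontend_threads: list[int]) -> list[str]:
    """Return human-readable problems found in the XML-RPC section of storm.properties."""
    problems = []
    # Defaults taken from the table above
    maxthread = int(props.get("synchcall.xmlrpc.maxthread", "100"))
    needed = sum(frontend_threads)
    if maxthread < needed:
        problems.append(
            f"synchcall.xmlrpc.maxthread={maxthread} is below the {needed} "
            "worker threads of all Frontends"
        )
    enabled = props.get("synchcall.xmlrpc.security.enabled", "true").strip().lower() == "true"
    token = props.get("synchcall.xmlrpc.security.token", "").strip()
    if enabled and not token:
        problems.append("synchcall.xmlrpc.security.token is mandatory when security is enabled")
    return problems


print(check_xmlrpc_settings({"synchcall.xmlrpc.security.token": "changeme"}, [50, 50]))  # []
```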

REST interface parameters

| Property Name | Description |
|---|---|
| storm.rest.services.port | REST services port. Default: 9998 |
| storm.rest.services.maxthreads | REST services max active requests. Default: 100 |
| storm.rest.services.max_queue_size | REST services max queue size of accepted requests. Default: 1000 |

Database connection parameters

| Property Name | Description |
|---|---|
| storm.service.request-db.host | Host for the StoRM database. Default: localhost |
| storm.service.request-db.username | Username for the database connection. Default: storm |
| storm.service.request-db.passwd | Password for the database connection |
| storm.service.request-db.properties | Database connection URL properties. Default: serverTimezone=UTC&autoReconnect=true |
| asynch.db.ReconnectPeriod | Database connection refresh interval in seconds. Default: 18000 |
| asynch.db.DelayPeriod | Database connection refresh initial delay in seconds. Default: 30 |
| persistence.internal-db.connection-pool.maxActive | Database connection pool max active connections. Default: 10 |
| persistence.internal-db.connection-pool.maxWait | Database connection pool max wait time to provide a connection. Default: 50 |

SRM Requests Picker

| Property Name | Description |
|---|---|
| asynch.PickingInitialDelay | Initial delay before starting to pick requests from the DB, in seconds. Default: 1 |
| asynch.PickingTimeInterval | Polling interval in seconds to pick up new SRM requests. Default: 2 |
| asynch.PickingMaxBatchSize | Maximum number of requests picked up at each polling time. Default: 100 |
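Together, the three picker parameters bound how fast queued asynchronous requests reach the worker pools. A minimal Python sketch, assuming a fixed backlog and no new arrivals while it drains; picker_schedule is a hypothetical helper, not a StoRM function.

```python
def picker_schedule(pending: int, initial_delay: int = 1, interval: int = 2,
                    max_batch: int = 100) -> list[tuple[int, int]]:
    """Return (seconds after start, requests picked) for each polling run
    needed to drain a backlog of `pending` requests."""
    schedule = []
    t = initial_delay
    while pending > 0:
        batch = min(pending, max_batch)
        schedule.append((t, batch))
        pending -= batch
        t += interval
    return schedule


print(picker_schedule(250))  # [(1, 100), (3, 100), (5, 50)]
```

With the defaults the picker moves at most 100 requests every 2 seconds, i.e. at most 50 requests per second; raising asynch.PickingMaxBatchSize or lowering asynch.PickingTimeInterval raises that ceiling.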

Worker threads

| Property Name | Description |
|---|---|
| scheduler.crusher.workerCorePoolSize | Crusher Scheduler worker pool base size. Default: 10 |
| scheduler.crusher.workerMaxPoolSize | Crusher Scheduler worker pool max size. Default: 50 |
| scheduler.crusher.queueSize | Crusher Scheduler request queue maximum size. Default: 2000 |
| scheduler.chunksched.ptg.workerCorePoolSize | PrepareToGet worker pool base size. Default: 50 |
| scheduler.chunksched.ptg.workerMaxPoolSize | PrepareToGet worker pool max size. Default: 200 |
| scheduler.chunksched.ptg.queueSize | PrepareToGet request queue maximum size. Default: 2000 |
| scheduler.chunksched.ptp.workerCorePoolSize | PrepareToPut worker pool base size. Default: 50 |
| scheduler.chunksched.ptp.workerMaxPoolSize | PrepareToPut worker pool max size. Default: 200 |
| scheduler.chunksched.ptp.queueSize | PrepareToPut request queue maximum size. Default: 1000 |
| scheduler.chunksched.bol.workerCorePoolSize | BringOnline worker pool base size. Default: 50 |
| scheduler.chunksched.bol.workerMaxPoolSize | BringOnline worker pool max size. Default: 200 |
| scheduler.chunksched.bol.queueSize | BringOnline request queue maximum size. Default: 2000 |
| scheduler.chunksched.copy.workerCorePoolSize | Copy worker pool base size. Default: 10 |
| scheduler.chunksched.copy.workerMaxPoolSize | Copy worker pool max size. Default: 50 |
| scheduler.chunksched.copy.queueSize | Copy request queue maximum size. Default: 500 |
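Each scheduler is configured by a base size, a max size and a queue size. If these pools behave like Java's java.util.concurrent.ThreadPoolExecutor with a bounded queue (an assumption; this document does not state the implementation), the decision taken for each new task is the one sketched below in Python. The dispatch function is hypothetical and only models that rule.

```python
def dispatch(running: int, queued: int, core: int, max_size: int, queue_size: int) -> str:
    """What happens to a new task under ThreadPoolExecutor-style rules."""
    if running < core:
        return "start-core-thread"   # below the base size: always add a thread
    if queued < queue_size:
        return "enqueue"             # base size reached: queue the task first
    if running < max_size:
        return "start-extra-thread"  # queue full: grow towards the max size
    return "reject"                  # queue full and max size reached


# PrepareToGet defaults: base 50, max 200, queue 2000
print(dispatch(50, 2000, 50, 200, 2000))  # start-extra-thread
```

The practical consequence, under that assumption, is that threads beyond the base size are only created once the queue is full: with the PrepareToGet defaults the pool stays at 50 threads until 2000 requests are waiting.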

Protocol balancing

| Property Name | Description |
|---|---|
| gridftp-pool.status-check.timeout | Time in milliseconds after which the status of a GridFTP server has to be verified. Default: 20000 (20 seconds) |

Tape recall

| Property Name | Description |
|---|---|
| tape.recalltable.service.param.retry-value | Default: retry-value |
| tape.recalltable.service.param.status | Default: status |
| tape.recalltable.service.param.takeover | Default: first |

Disk Usage Service

The Disk Usage Service was introduced in StoRM v1.11.18 and allows administrators to enable periodic du calls on each Storage Area root directory in order to compute the used space size.

By default the service is disabled. Set storm.service.du.enabled to true in your storm.properties file to enable it.

| Property Name | Description |
|---|---|
| storm.service.du.enabled | Flag to enable the disk usage service. Default: false |
| storm.service.du.delay | The initial delay before the service is started (seconds). Default: 60 |
| storm.service.du.interval | The interval in seconds between successive runs. Default: 360. |

Example of output from the Backend log with the du service enabled, a delay of 0 and an interval of one week:

04:26:50.920 - INFO [main] - Starting DiskUsage Service (delay: 0s, period: 604800s)
04:26:50.943 - INFO [main] - DiskUsage Service started.
...
04:26:50.961 - INFO [pool-6-thread-1] - DiskUsageTask for NESTED_TOKEN on /storage/nested started ...
04:26:51.364 - INFO [pool-6-thread-1] - DiskUsageTask for NESTED_TOKEN successfully ended in 0s with used-size = 4096 bytes
04:26:51.365 - INFO [pool-6-thread-1] - DiskUsageTask for IGI_TOKEN on /storage/igi started ...
04:26:51.455 - INFO [pool-6-thread-1] - DiskUsageTask for IGI_TOKEN successfully ended in 0s with used-size = 4096 bytes
04:26:51.458 - INFO [pool-6-thread-1] - DiskUsageTask for TAPE_TOKEN on /storage/tape started ...
04:26:51.624 - INFO [pool-6-thread-1] - DiskUsageTask for TAPE_TOKEN successfully ended in 0s with used-size = 8286 bytes
04:26:51.625 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVOBIS_TOKEN on /storage/test.vo.bis started ...
04:26:51.784 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVOBIS_TOKEN successfully ended in 0s with used-size = 4096 bytes
04:26:51.784 - INFO [pool-6-thread-1] - DiskUsageTask for NOAUTH_TOKEN on /storage/noauth started ...
04:26:51.857 - INFO [pool-6-thread-1] - DiskUsageTask for NOAUTH_TOKEN successfully ended in 0s with used-size = 4096 bytes
04:26:51.858 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVO2_TOKEN on /storage/test.vo.2 started ...
04:26:51.993 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVO2_TOKEN successfully ended in 0s with used-size = 4096 bytes
04:26:51.994 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVO_TOKEN on /storage/test.vo started ...
04:26:52.100 - INFO [pool-6-thread-1] - DiskUsageTask for TESTVO_TOKEN successfully ended in 0s with used-size = 4108 bytes
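Each DiskUsageTask computes the used size of one Storage Area root with a du-style scan. The Python sketch below approximates du -sb (apparent sizes, hard links counted once, symbolic links not followed); whether StoRM invokes du with exactly these options is an assumption, and used_size is a hypothetical helper.

```python
import os


def used_size(root: str) -> int:
    """Approximate `du -sb root`: sum of apparent sizes of root and everything below it."""
    total = 0
    seen = set()  # (device, inode) pairs, so hard-linked files are counted once
    for dirpath, dirnames, filenames in os.walk(root):  # does not follow symlinks
        entries = [dirpath]
        entries += [os.path.join(dirpath, n) for n in filenames]
        # Symlinks to directories are listed in dirnames but never descended into
        entries += [os.path.join(dirpath, n) for n in dirnames
                    if os.path.islink(os.path.join(dirpath, n))]
        for path in entries:
            st = os.lstat(path)
            key = (st.st_dev, st.st_ino)
            if key in seen:
                continue
            seen.add(key)
            total += st.st_size
    return total


# e.g. used_size("/storage/dteam") for the Storage Area root used elsewhere in this guide
```

The recurring 4096-byte results in the log above are consistent with nearly empty roots, since on many filesystems a directory entry alone has an apparent size of 4096 bytes.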

Storage information

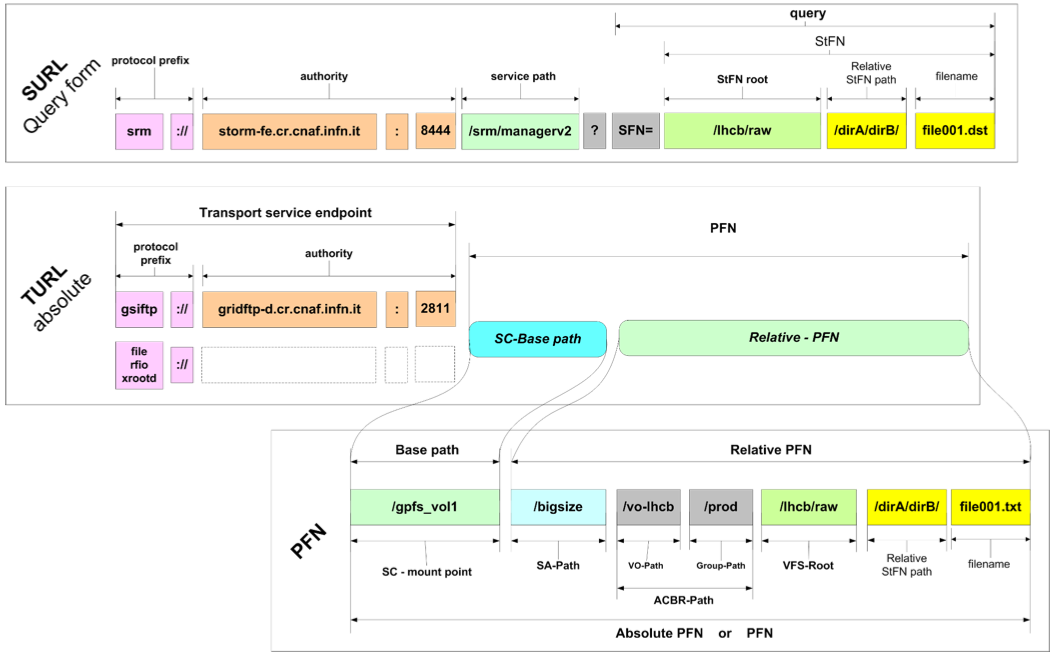

Information about the storage managed by StoRM is stored in a configuration file named namespace.xml, located in /etc/storm/backend-server/ on the StoRM Backend host. Among other things, namespace.xml holds what is needed to perform the mapping functionality. The mapping functionality is the process of retrieving or building the transfer URL (TURL) of a file addressed by a Site URL (SURL), together with the grid user credential. Fig. 3 shows the different schemas of SURL and TURL.

Fig. 3: Site URL and Transfer URL schema.

A couple of quick concepts from SRM:

- The SURL is the logical identifier for a local data entity

- Data access and data transfer are made through the TURLs

- The TURL identifies the physical location of a replica

- SRM services retrieve the TURL from a namespace database (like the DPNS component in DPM) or build it through other mechanisms (like StoRM)

In StoRM, the mapping functionality is provided by the namespace component (NS).

- The Namespace component works without a database.

- The Namespace component is based on an XML configuration.

- It relies on the physical storage structure.

The basic features of the namespace component are:

- The configuration is modular and structured (representation is based on XML)

- An efficient structure of the namespace configuration lives in memory.

- No access to disk or database is performed during mapping

- The loading and the parsing of the configuration file occur:

  - at start-up of the Backend service

  - when the configuration file is modified

StoRM differs from other solutions, where typically every SRM request requires a database query to establish the physical location of a file and build the correct transfer URL. The namespace functions rely on two kinds of parameters for mapping operations, both derived from the SRM requests:

- the grid user credential (a subject or a service acting on behalf of the subject)

- the SURL

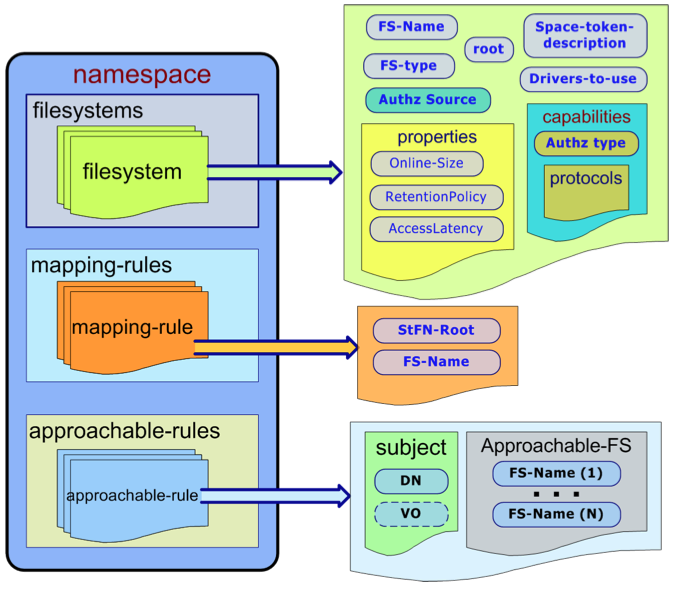

Fig. 4 shows the main concepts of the Namespace component:

- NS-Filesystem: the representation of a Storage Area

- Mapping rule: represents the basic rule for the mapping functionalities

- Approachable rule: represents the coarse grain access control to the Storage Area.

Fig. 4: Namespace structure.

This is an example of the FS element:

<filesystem name="dteam-FS" fs_type="ext3">
  <space-token-description>DTEAM_TOKEN</space-token-description>
  <root>/storage/dteam</root>
  <filesystem-driver>it.grid.storm.filesystem.swig.posixfs</filesystem-driver>
  <spacesystem-driver>it.grid.storm.filesystem.MockSpaceSystem</spacesystem-driver>
  <storage-area-authz>
    <fixed>permit-all</fixed>
  </storage-area-authz>
  <properties>
    <RetentionPolicy>replica</RetentionPolicy>
    <AccessLatency>online</AccessLatency>
    <ExpirationMode>neverExpire</ExpirationMode>
    <TotalOnlineSize unit="GB" limited-size="true">291</TotalOnlineSize>
    <TotalNearlineSize unit="GB">0</TotalNearlineSize>
  </properties>
  <capabilities>
    <aclMode>AoT</aclMode>
    <default-acl>
      <acl-entry>
        <groupName>lhcb</groupName>
        <permissions>RW</permissions>
      </acl-entry>
    </default-acl>
    <trans-prot>
      <prot name="file">
        <schema>file</schema>
      </prot>
      <prot name="gsiftp">
        <id>0</id>
        <schema>gsiftp</schema>
        <host>gsiftp-dteam-01.cnaf.infn.it</host>
        <port>2811</port>
      </prot>
      <prot name="gsiftp">
        <id>1</id>
        <schema>gsiftp</schema>
        <host>gsiftp-dteam-02.cnaf.infn.it</host>
        <port>2811</port>
      </prot>
      <prot name="rfio">
        <schema>rfio</schema>
        <host>rfio-dteam.cnaf.infn.it</host>
        <port>5001</port>
      </prot>
      <prot name="root">
        <schema>root</schema>
        <host>root-dteam.cnaf.infn.it</host>
        <port>1094</port>
      </prot>
    </trans-prot>
    <pool>
      <balance-strategy>round-robin</balance-strategy>
      <members>
        <member member-id="0"></member>
        <member member-id="1"></member>
      </members>
    </pool>
  </capabilities>
  <defaults-values>
    <space lifetime="86400" type="volatile" guarsize="291" totalsize="291"/>
    <file lifetime="3600" type="volatile"/>
  </defaults-values>
</filesystem>

Meaning of the attributes:

- <filesystem name="dteam-FS" fs_type="ext3">: name is the element identifier; it identifies this Storage Area in the namespace domain. fs_type is the type of the filesystem the Storage Area is built on. Possible values are: ext3, gpfs. Note that ext3 stands for any generic POSIX filesystem (ext3, Lustre, etc.).

- <space-token-description>DTEAM_TOKEN</space-token-description>: Storage Area space token description.

- <root>/storage/dteam</root>: physical root directory of the Storage Area on the file system.

- <filesystem-driver>it.grid.storm.filesystem.swig.posixfs</filesystem-driver>: driver loaded by the Backend for filesystem interaction. This driver is used mainly to set up ACLs on spaces and files.

- <spacesystem-driver>it.grid.storm.filesystem.MockSpaceSystem</spacesystem-driver>: driver loaded by the Backend for filesystem interaction. This driver is used to manage space allocation (e.g. on GPFS it uses the gpfs_prealloc() call).

Storage Area properties

<properties>
  <RetentionPolicy>replica</RetentionPolicy>
  <AccessLatency>online</AccessLatency>
  <ExpirationMode>neverExpire</ExpirationMode>
  <TotalOnlineSize unit="GB" limited-size="true">291</TotalOnlineSize>
  <TotalNearlineSize unit="GB">0</TotalNearlineSize>
</properties>

In detail:

- <RetentionPolicy>replica</RetentionPolicy>: Retention Policy of the Storage Area. Possible values are: replica, custodial.

- <AccessLatency>online</AccessLatency>: Access Latency of the Storage Area. Possible values: online, nearline.

- <ExpirationMode>neverExpire</ExpirationMode>: Expiration Mode of the Storage Area. Deprecated.

- <TotalOnlineSize unit="GB" limited-size="true">291</TotalOnlineSize>: total online size of the Storage Area in gigabytes. If the attribute limited-size="true" is set, StoRM enforces this limit at SRM level: when the space used by the Storage Area is at least equal to the specified size, every further SRM request to write files fails with the SRM_NO_FREE_SPACE error code.

- <TotalNearlineSize unit="GB">0</TotalNearlineSize>: total nearline size of the Storage Area. This is meaningful only when the Storage Area is attached to an MSS storage system (such as TSM with GPFS).
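The limited-size rule on TotalOnlineSize reduces to a comparison between used space and the configured size. A minimal Python sketch, assuming 1 GB = 10**9 bytes (the unit conversion StoRM applies is not stated here); check_write_allowed is a hypothetical helper.

```python
def check_write_allowed(used_bytes: int, total_online_gb: float, limited_size: bool) -> str:
    """Return the SRM error for a new write request, or "OK" if the write may proceed."""
    # Assumption: decimal gigabytes, 1 GB = 10**9 bytes
    limit_bytes = total_online_gb * 10**9
    if limited_size and used_bytes >= limit_bytes:
        return "SRM_NO_FREE_SPACE"
    return "OK"


print(check_write_allowed(291 * 10**9, 291, True))  # SRM_NO_FREE_SPACE
```

Without limited-size="true" the same usage is accepted, so the configured size is then only informational in this model.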

Storage area capabilities

<aclMode>AoT</aclMode>

This is the ACL enforcing approach. Possible values are: AoT, JiT. With the AheadOfTime (AoT) approach, StoRM sets up a physical ACL on files and directories for the local group (gid) to which the user is mapped. (The mapping is done by querying the LCMAPS service on the BE machine, passing both the user DN and FQANs.) The group ACL remains for the whole lifetime of the file. With the JustInTime (JiT) approach, StoRM sets up an ACL for the local user (uid) to which the user is mapped. The ACL remains in place only for the lifetime of the SRM request, then StoRM removes it. (This avoids granting access to a pool account uid when it is reassigned to a different user.)

<default-acl>
  <acl-entry>
    <groupName>lhcb</groupName>
    <permissions>RW</permissions>
  </acl-entry>
</default-acl>

This is the default ACL list: a list of ACL entries, each specifying a local user (uid) or group (gid) and a permission (R, W, RW). These ACLs are automatically set by StoRM at each read or write request. This is useful when an experiment wants to allow local access to files for a group different from the one that made the SRM request.
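The difference between AoT and JiT, plus the default ACL entries, can be summarized as the set of entries StoRM sets for one request. A minimal Python sketch: the uid/gid values are hypothetical, the lhcb group is assumed to be already resolved to a gid (3000 here), and the transient flag refers only to the mapped user's entry; the lifetime of default ACL entries is not modeled. acl_plan is a hypothetical helper.

```python
def acl_plan(acl_mode: str, uid: int, gid: int, perms: str,
             default_acl: tuple[tuple[str, int, str], ...] = ()):
    """Return (entries, mapped_entry_is_transient) for one read/write request.

    Each entry is (kind, id, perms) with kind "user" or "group".
    """
    if acl_mode == "AoT":
        # Group ACL for the mapped gid, kept for the whole lifetime of the file
        entries, transient = [("group", gid, perms)], False
    elif acl_mode == "JiT":
        # User ACL for the mapped uid, removed when the SRM request ends
        entries, transient = [("user", uid, perms)], True
    else:
        raise ValueError(f"unknown aclMode: {acl_mode!r}")
    # Default ACL entries from namespace.xml are set at every read or write request
    entries.extend(default_acl)
    return entries, transient


print(acl_plan("AoT", 1001, 2001, "RW", (("group", 3000, "RW"),)))
# ([('group', 2001, 'RW'), ('group', 3000, 'RW')], False)
```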

#### Supported access and transfer protocols

The file protocol:

<prot name="file">
  <schema>file</schema>
</prot>

The file protocol provides the capability to perform local access on files and directories. If a user performs an SRM request (srmPtG or srmPtP) specifying the file protocol, and the protocol is supported by the selected Storage Area, StoRM returns a TURL structured as:

file:///atlas/atlasmcdisk/filename

This TURL can be used through GFAL or other SRM clients to perform direct access to the file.

The gsiftp protocol:

<prot name="gsiftp">
  <id>0</id>
  <schema>gsiftp</schema>
  <host>gridftp-dteam.cnaf.infn.it</host>
  <port>2811</port>
</prot>

The gsiftp protocol refers to the Globus GridFTP transfer system, widely adopted in many Grid environments. This capability element contains all the information about the GridFTP server to use with this Storage Area. Site administrators can decide to use different servers (or pools of servers) for different Storage Areas. The id is the server identifier to be used when defining a pool. The schema must be gsiftp. host is the hostname of the server (or the DNS alias used to aggregate more than one server). port is the GridFTP server port, typically 2811. If a user performs an SRM request (srmPtG or srmPtP) specifying the gsiftp protocol, and the protocol is supported by the selected Storage Area, StoRM returns a TURL structured as:

gsiftp://gridftp-dteam.cnaf.infn.it:2811/atlas/atlasmcdisk/filename

The rfio protocol:

<prot name="rfio">
  <schema>rfio</schema>
  <host>rfio-dteam.cnaf.infn.it</host>
  <port>5001</port>
</prot>

This capability element contains all the information about the rfio server to use with this Storage Area. As for GridFTP, site administrators can decide to use different servers (or pools of servers) for different Storage Areas. The id is the server identifier. The schema must be rfio. host is the hostname of the server (or the DNS alias used to aggregate more than one server). port is the rfio server port, typically 5001. If a user performs an SRM request (srmPtG or srmPtP) specifying the rfio protocol, and the protocol is supported by the selected Storage Area, StoRM returns a TURL structured as:

rfio://rfio-dteam.cnaf.infn.it:5001/atlas/atlasmcdisk/filename

The root protocol:

<prot name="root">
  <schema>root</schema>
  <host>root-dteam.cnaf.infn.it</host>
  <port>1094</port>
</prot>

This capability element contains all the information about the root server to use with this Storage Area. As for the other protocols, site administrators can decide to use different servers (or pools of servers) for different Storage Areas. The id is the server identifier. The schema must be root. host is the hostname of the server (or the DNS alias used to aggregate more than one server). port is the root server port, typically 1094. If a user performs an SRM request (srmPtG or srmPtP) specifying the root protocol, and the protocol is supported by the selected Storage Area, StoRM returns a TURL structured as:

root://root-dteam.cnaf.infn.it:1094/atlas/atlasmcdisk/filename
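All the TURLs above follow the same pattern: schema, then host:port from the prot element, then the file path, with the file protocol having no authority part. A minimal Python sketch of that composition; build_turl is a hypothetical helper, the path is taken as given (how StoRM derives it from the SURL is not modeled), and extra_slashes models the extraslashes.* properties from the Service Parameters table with an assumed string format.

```python
from typing import Optional


def build_turl(schema: str, path: str, host: Optional[str] = None,
               port: Optional[int] = None, extra_slashes: str = "") -> str:
    """Compose a TURL as schema://host:port + extra slashes + path."""
    if not path.startswith("/"):
        raise ValueError("path must be absolute")
    if schema == "file":
        # Local access: no authority part, hence the three slashes
        return f"file://{path}"
    authority = f"{host}:{port}" if port is not None else host
    return f"{schema}://{authority}{extra_slashes}{path}"


print(build_turl("rfio", "/atlas/atlasmcdisk/filename", "rfio-dteam.cnaf.infn.it", 5001))
# rfio://rfio-dteam.cnaf.infn.it:5001/atlas/atlasmcdisk/filename
```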

Pool of protocol servers

<pool>
  <balance-strategy>round-robin</balance-strategy>
  <members>
    <member member-id="0"></member>
    <member member-id="1"></member>
  </members>
</pool>

This defines a pool of protocol servers. Within the pool element, pool members are declared and identified by their id; the list of members must be homogeneous with respect to their schema. This id is the server identifier specified in the prot element. The balance-strategy is the load balancing strategy used to manage the pool. Possible values are: round-robin, smart-rr, random and weight.

NOTE: Protocol server pooling is currently available only for gsiftp servers.

Load balancing strategies details:

- round-robin: at each TURL construction request the strategy returns the next server following the round-robin approach: a circular list with an index starting from the head and incremented at each request.

- smart-rr: an enhanced version of round-robin. The status of pool members is monitored and maintained in a cache. Cache entries have a validity lifetime and are refreshed when expired. If the member chosen by round-robin is marked as not responsive, another iteration of round-robin is performed.

- random: at each TURL construction request the strategy returns a random member of the pool.

- weight: an enhanced version of round-robin. When a server is chosen, the list index is not moved forward (so the same server is chosen again at the next request) for as many times as specified by its weight.

NOTE: The weight has to be specified in a weight element inside the member element:

<pool>
  <balance-strategy>WEIGHT</balance-strategy>
  <members>
    <member member-id="0">
      <weight>5</weight>
    </member>
    <member member-id="1">
      <weight>1</weight>
    </member>
  </members>
</pool>
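The four balancing strategies can be modeled in a few lines each. This Python sketch is an illustration, not StoRM's code: the status cache of smart-rr is replaced by an is_responsive callback, and weight is interpreted as "the member is returned weight times in a row" (one reading of the description above, assuming weights of at least 1). All class and function names are hypothetical.

```python
import random
from typing import Callable, Hashable, Sequence


class RoundRobin:
    """Circular list with an index that advances at every request."""

    def __init__(self, members: Sequence[Hashable]):
        self.members = list(members)
        self.index = 0

    def pick(self):
        member = self.members[self.index]
        self.index = (self.index + 1) % len(self.members)
        return member


class SmartRoundRobin(RoundRobin):
    """Round-robin that skips members reported as not responsive."""

    def __init__(self, members, is_responsive: Callable[[Hashable], bool]):
        super().__init__(members)
        self.is_responsive = is_responsive  # stands in for StoRM's status cache

    def pick(self):
        for _ in range(len(self.members)):
            member = super().pick()
            if self.is_responsive(member):
                return member
        raise RuntimeError("no responsive pool member")


class Weighted:
    """Round-robin where each member is returned `weight` times in a row."""

    def __init__(self, weights: dict):
        self.members = list(weights.items())  # [(member_id, weight), ...]
        self.index = 0
        self.served = 0

    def pick(self):
        member, weight = self.members[self.index]
        self.served += 1
        if self.served >= weight:  # weight reached: move the index forward
            self.served = 0
            self.index = (self.index + 1) % len(self.members)
        return member


def pick_random(members, rng=random):
    """Random strategy: any member, independently at each request."""
    return rng.choice(list(members))


w = Weighted({0: 5, 1: 1})  # the WEIGHT example above
print([w.pick() for _ in range(7)])  # [0, 0, 0, 0, 0, 1, 0]
```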

Storage space information default values

<defaults-values>
  <space lifetime="86400" type="volatile" guarsize="291" totalsize="291"/>
  <file lifetime="3600" type="volatile"/>
</defaults-values>

Mapping rules

A mapping rule defines how a certain NS-Filesystem, which corresponds to a Storage Area in SRM terms, is exposed on the Grid:

<mapping-rules>
  <map-rule name="dteam-maprule">
    <stfn-root>/dteam</stfn-root>
    <mapped-fs>dteam-FS</mapped-fs>
  </map-rule>
</mapping-rules>

The <stfn-root> is the path used to build SURLs referring to that Storage Area. The mapping rule above defines that the NS-Filesystem named dteam-FS has to be mapped to the /dteam SURL path. Given the NS-Filesystem element defined in the previous section, the SURL:

srm://storm-fe.cr.cnaf.infn.it:8444/dteam/testfile

is mapped, following the root expressed in the dteam-FS NS-Filesystem element (/storage/dteam), to the following physical path on the file system:

/storage/dteam/testfile

This approach works similarly to an alias, from the SURL stfn-root path to the NS-Filesystem root.
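The alias behaviour of a mapping rule can be sketched as a prefix substitution. In this Python sketch the two dictionaries are a hypothetical in-memory view of the dteam example, the StFN is read either from the SFN query parameter or from the SURL path, and choosing the longest matching stfn-root when roots are nested is an assumption; surl_to_physical is a hypothetical helper.

```python
import posixpath
from typing import Optional
from urllib.parse import parse_qs, urlsplit

# Hypothetical in-memory view of the namespace.xml examples above
MAPPING = {"/dteam": "dteam-FS"}           # <stfn-root> -> <mapped-fs>
FS_ROOTS = {"dteam-FS": "/storage/dteam"}  # <filesystem name> -> <root>


def stfn_of(surl: str) -> str:
    """Extract the StFN, from the SFN query parameter if present, else from the path."""
    parts = urlsplit(surl)
    sfn = parse_qs(parts.query).get("SFN")
    return sfn[0] if sfn else parts.path


def surl_to_physical(surl: str, mapping=MAPPING, fs_roots=FS_ROOTS) -> Optional[str]:
    """Replace the matching stfn-root with the NS-Filesystem root, or return None."""
    stfn = posixpath.normpath(stfn_of(surl))
    # Longest stfn-root first, so nested roots win (an assumption)
    for stfn_root in sorted(mapping, key=len, reverse=True):
        prefix = stfn_root.rstrip("/")
        if stfn == stfn_root or stfn.startswith(prefix + "/"):
            return fs_roots[mapping[stfn_root]].rstrip("/") + stfn[len(prefix):]
    return None


print(surl_to_physical("srm://storm-fe.cr.cnaf.infn.it:8444/dteam/testfile"))
# /storage/dteam/testfile
```

Normalizing the StFN first means a path such as /dteam/../etc no longer starts with /dteam and is therefore not mapped.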

Approachable rules

Approachable rules define which users (or which classes of users) can approach a certain Storage Area, always expressed as an NS-Filesystem element. If a user can approach a Storage Area, they can use it for all SRM operations. If a user is not allowed to approach a Storage Area and tries to specify it in any SRM request, they will receive an SRM_INVALID_PATH. In practice, if a user cannot approach a Storage Area, for them that specific path does not exist at all. Here is an example of an approachable rule for the dteam-FS element:

<approachable-rules>
  <app-rule name="dteam-rule">
    <subjects>
      <dn>*</dn>
      <vo-name>dteam</vo-name>
    </subjects>
    <approachable-fs>dteam-FS</approachable-fs>
    <space-rel-path>/</space-rel-path>
  </app-rule>
</approachable-rules>

- <dn>*</dn> means that everybody can access the Storage Area. Here you can define regular expressions on DN fields to express more complex approachable rules.

- <vo-name>dteam</vo-name> means that only users belonging to the VO dteam will be allowed to access the Storage Area. This entry can be a comma-separated list of VO names.
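The two subject conditions of an app-rule can be sketched as a DN match combined with a VO membership test. In this Python sketch, treating the DN pattern as a full-match Python regular expression (with "*" special-cased, since a lone "*" is not a valid regex) is an assumption, as is the example DN; approachable and resolve are hypothetical helpers.

```python
import re


def approachable(user_dn: str, user_vos: list[str], rule_dn: str, rule_vos: str) -> bool:
    """True if a user matches an app-rule's <dn> and <vo-name> subjects."""
    # "*" alone is not a valid regular expression, so treat it as "any DN"
    dn_ok = rule_dn == "*" or re.fullmatch(rule_dn, user_dn) is not None
    allowed = {vo.strip() for vo in rule_vos.split(",") if vo.strip()}
    return dn_ok and not allowed.isdisjoint(user_vos)


def resolve(user_dn, user_vos, rule_dn, rule_vos, physical_path):
    """A Storage Area the user cannot approach looks like a non-existent path."""
    if not approachable(user_dn, user_vos, rule_dn, rule_vos):
        return "SRM_INVALID_PATH"
    return physical_path


dn = "/C=IT/O=INFN/CN=Mario Rossi"  # hypothetical user DN
print(resolve(dn, ["atlas"], "*", "dteam", "/storage/dteam"))  # SRM_INVALID_PATH
```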

Used space initialization

An administrator can initialize the status of a Storage Area by editing a configuration file, used-space.ini, which is parsed once at Backend bootstrap time.

See this configuration example for more info.

Configure the service with YAIM

StoRM Backend can be configured with the YAIM tool on the CentOS 6 platform.

Read more about the YAIM tool here, and about the general YAIM variables for a StoRM deployment.

StoRM Backend specific YAIM variables can be found in the following file:

/opt/glite/yaim/examples/siteinfo/services/se_storm_backend

You have to set at least these variables in your site-info.def:

- STORM_BACKEND_HOST

- STORM_DEFAULT_ROOT

- STORM_DB_PWD

and check the other variables to decide whether to keep their default values or change them.

The following table summarizes the YAIM variables for the StoRM Backend component.

| Var. Name | Description |

|---|---|

STORM_ACLMODE |

ACL enforcing mechanism (default value for all Storage Areas). Note: you may change the settings for each SA acting on STORM_[SA]_ACLMODE variable. Available values: aot, jit (use aot for WLCG experiments).Optional variable. Default value: aot |

STORM_ANONYMOUS_HTTP_READ |

Storage Area anonymous read access via HTTP. Note: you may change the settings for each SA acting on STORM_[SA]_ANONYMOUS_HTTP_READ variable.Optional variable. Available values: true, false. Default value: false |

STORM_AUTH |

Authorization mechanism (default value for all Storage Areas). Note: you may change the settings for each SA acting on STORM_[SA]_AUTH variable Available values: permit-all, deny-all, FILENAME.Optional variable. Default value: permit-all |

STORM_BACKEND_HOST |

Host name of the StoRM Backend server. Mandatory. |

STORM_BACKEND_REST_SERVICES_PORT |

StoRM backend server rest port. Optional variable. Default value: 9998 |

STORM_BE_XMLRPC_TOKEN |

Token used y Frontend in communication to the StoRM Backend |

STORM_CERT_DIR |

Host certificate directory for StoRM Backend service. Optional variable. Default value: /etc/grid-security/STORM_USER |

STORM_DEFAULT_ROOT |

Default directory for Storage Areas. Mandatory. |

STORM_DB_HOST |

Host for database connection. Optional variable. Default value: localhost |

STORM_DB_PWD |

Password for database connection. Mandatory. |

STORM_DB_USER |

User for database connection. Optional variable. Default value: storm |

STORM_FRONTEND_HOST_LIST |

StoRM Frontend service host list: SRM endpoints can be more than one virtual host different from STORM_BACKEND_HOST (i.e. dynamic DNS for multiple StoRM Frontends).Mandatory variable. Default value: STORM_BACKEND_HOST |

STORM_FRONTEND_PATH |

StoRM Frontend service path. Optional variable. Default value: /srm/managerv2 |

STORM_FRONTEND_PORT |

StoRM Frontend service port. Optional variable. Default value: 8444 |

STORM_FRONTEND_PUBLIC_HOST |

StoRM Frontend service public host. It’s used by StoRM Info Provider to publish the SRM endpoint into the Resource BDII. Mandatory variable. Default value: STORM_BACKEND_HOST |

STORM_FSTYPE |

File System Type (default value for all Storage Areas). Note: you may change the settings for each SA acting on STORM_[SA]_FSTYPE variable.Optional variable. Available values: posixfs, gpfs and test. Default value: posixfs |

STORM_GRIDFTP_POOL_LIST |

GridFTP servers pool list (default value for all Storage Areas). Note: you may change the settings for each SA acting on STORM_[SA]_GRIDFTP_POOL_LIST variable.ATTENTION: this variable define a list of pair values space-separated: host weight, e.g.: STORM_GRIDFTP_POOL_LIST="host1 weight1, host2 weight2, host3 weight3" Weight has 0-100 range; if not specified, weight will be 100.Mandatory variable. Default value: STORM_BACKEND_HOST |

STORM_GRIDFTP_POOL_STRATEGY |

Load balancing strategy for GridFTP server pool (default value for all Storage Areas). Note: you may change the settings for each SA acting on STORM_[SA]_GRIDFTP_POOL_STRATEGY variable.Optional variable. Available values: round-robin, smart-rr, random, weight. Default value: round-robin |

STORM_INFO_FILE_SUPPORT |

If set to false, prevents the file protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: true |

STORM_INFO_GRIDFTP_SUPPORT |

If set to false, prevents the GridFTP protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: true |

STORM_INFO_RFIO_SUPPORT |

If set to false, prevents the RFIO protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: false |

STORM_INFO_ROOT_SUPPORT |

If set to false, prevents the root protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: false |

STORM_INFO_HTTP_SUPPORT |

If set to false, prevents the HTTP protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: false |

STORM_INFO_HTTPS_SUPPORT |

If set to false, prevents the HTTPS protocol from being published by the StoRM gip. Optional variable. Available values: true, false. Default value: false |

STORM_NAMESPACE_OVERWRITE |

This parameter tells YAIM to overwrite namespace.xml configuration file. Optional variable. Available values: true, false. Default value: true |

STORM_RFIO_HOST |

RFIO server (default value for all Storage Areas). Note: you may change the setting for each SA by setting the STORM_[SA]_RFIO_HOST variable. Optional variable. Default value: STORM_BACKEND_HOST |

STORM_ROOT_HOST |

Root server (default value for all Storage Areas). Note: you may change the setting for each SA by setting the STORM_[SA]_ROOT_HOST variable. Optional variable. Default value: STORM_BACKEND_HOST |

STORM_SERVICE_SURL_DEF_PORTS |

Comma-separated list of the default ports of managed SURLs, used to check SURL validity. Optional variable. Default value: 8444 |

STORM_SIZE_LIMIT |

Limits the maximum available space on the Storage Area (default value for all Storage Areas). Note: you may change the setting for each SA by setting the STORM_[SA]_SIZE_LIMIT variable. Optional variable. Available values: true, false. Default value: true |

STORM_STORAGEAREA_LIST |

List of supported Storage Areas. Usually at least one Storage Area for each VO specified in VOS should be created. Optional variable. Default value: VOS |

STORM_STORAGECLASS |

Storage Class type (default value for all Storage Areas). Note: you may change the setting for each SA by setting the STORM_[SA]_STORAGECLASS variable. Optional variable. Available values: T0D1, T1D0, T1D1. No default value. |

STORM_SURL_ENDPOINT_LIST |

This is a comma-separated list of the SRM endpoints managed by the Backend. A SURL is accepted only if this list contains the endpoint it specifies. Optional variable. Default value: srm://STORM_FRONTEND_PUBLIC_HOST:STORM_FRONTEND_PORT/STORM_FRONTEND_PATH. If you want to accept requests whose incoming SURLs contain the IP address instead of the FQDN hostname, add the full SRM endpoint to this list. |

STORM_ENDPOINT_QUALITY_LEVEL |

Endpoint maturity level to be published by the StoRM gip. Optional variable. Default value: 2 |

STORM_ENDPOINT_SERVING_STATE |

Endpoint serving state to be published by the StoRM gip. Optional variable. Default value: 4 |

STORM_ENDPOINT_CAPABILITY |

Capability according to OGSA to be published by the StoRM gip. Optional variable. Default value: data.management.storage |

STORM_WEBDAV_POOL_LIST |

Publish the WebDAV endpoints listed by this variable. Default value: https://STORM_BACKEND_HOST:8443,http://STORM_BACKEND_HOST:8085 |
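The two list-valued variables STORM_GRIDFTP_POOL_LIST and STORM_SURL_ENDPOINT_LIST are easy to get wrong. The Python helpers below sketch how their values are composed. The first parses the pool list into (host, weight) pairs. The second builds the default SURL endpoint entry.

The sketch assumes the format described above: comma-separated "host weight" pairs, with a weight in the 0-100 range that defaults to 100 when omitted. It also assumes the default Frontend port and path. The function names are hypothetical and are not part of YAIM or StoRM.

```python
# Hypothetical helpers (not part of StoRM or YAIM) showing how the
# STORM_GRIDFTP_POOL_LIST and STORM_SURL_ENDPOINT_LIST values are composed.
from typing import List, Tuple

DEFAULT_WEIGHT = 100  # weight used when a pool entry omits it


def parse_gridftp_pool_list(value: str) -> List[Tuple[str, int]]:
    """Split "host1 w1, host2 w2" into (host, weight) pairs."""
    pool = []
    for entry in value.split(","):
        fields = entry.split()
        if not fields:
            continue  # skip empty entries, e.g. from a trailing comma
        host = fields[0]
        weight = int(fields[1]) if len(fields) > 1 else DEFAULT_WEIGHT
        if not 0 <= weight <= 100:
            raise ValueError(f"weight for {host} must be in 0-100, got {weight}")
        pool.append((host, weight))
    return pool


def default_surl_endpoint(public_host: str, port: int = 8444,
                          path: str = "/srm/managerv2") -> str:
    """Compose srm://HOST:PORT/PATH from the Frontend variables' defaults."""
    return f"srm://{public_host}:{port}/{path.lstrip('/')}"


if __name__ == "__main__":
    print(parse_gridftp_pool_list("gridftp1.example.org 80, gridftp2.example.org"))
    print(default_surl_endpoint("frontend.example.org"))
```

With the sample values, the first call yields [('gridftp1.example.org', 80), ('gridftp2.example.org', 100)]. The second yields srm://frontend.example.org:8444/srm/managerv2, which avoids a doubled slash when STORM_FRONTEND_PATH starts with "/".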

Storage Area variables

Then, for each Storage Area listed in the STORM_STORAGEAREA_LIST variable whose name is not the name of a valid VO, you have to set the compulsory STORM_[SA]_VONAME variable. SA has to be written in capital letters, as in the other variables included in the site-info.def file; otherwise default values will be used.

For DNS-like names, which use special characters such as ‘.’ or ‘-’, you have to remove the ‘.’ and ‘-’ characters.

For example, the SA value for the storage area “test.vo” must be TESTVO:

STORM_TESTVO_VONAME=test.vo

For each storage area SA listed in STORM_STORAGEAREA_LIST you have to set at least the STORM_[SA]_ONLINE_SIZE variable.
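The naming rule and the mandatory ONLINE_SIZE variable can be checked before running YAIM. The Python sketch below is a hypothetical pre-flight check, not a YAIM feature. It assumes that site-info.def has already been read into a dictionary of unquoted values. It also assumes that STORM_STORAGEAREA_LIST (or VOS, its default) is space-separated.

```python
# Hypothetical checker (not part of YAIM) for the per-SA naming rule and
# the mandatory STORM_[SA]_ONLINE_SIZE variable in site-info.def.
import re
from typing import Dict, List


def sa_variable_prefix(sa_name: str) -> str:
    """Map a Storage Area name to its variable infix: "test.vo" -> "TESTVO"."""
    return re.sub(r"[.-]", "", sa_name).upper()


def missing_online_sizes(site_info: Dict[str, str]) -> List[str]:
    """Return the STORM_[SA]_ONLINE_SIZE variables that are unset or not integers."""
    # STORM_STORAGEAREA_LIST defaults to VOS; both are assumed space-separated.
    sa_list = site_info.get("STORM_STORAGEAREA_LIST", site_info.get("VOS", ""))
    missing = []
    for sa in sa_list.split():
        var = f"STORM_{sa_variable_prefix(sa)}_ONLINE_SIZE"
        if not site_info.get(var, "").strip().isdigit():
            missing.append(var)
    return missing


if __name__ == "__main__":
    site_info = {
        "STORM_STORAGEAREA_LIST": "test.vo dteam",
        "STORM_TESTVO_VONAME": "test.vo",
        "STORM_TESTVO_ONLINE_SIZE": "40",
    }
    print(missing_online_sizes(site_info))  # ['STORM_DTEAM_ONLINE_SIZE']
```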

The per-SA variables are summarized in the following table:

| Variable Name | Description |
|---|---|
| STORM_[SA]_VONAME | Name of the VO that will use the Storage Area. Use the complete name, e.g. “lights.infn.it”. Use “*” to specify that no VO is associated with the Storage Area (it is then readable and writable by everyone, unless other filters apply). This variable becomes mandatory if the value of SA is not the name of a VO. |
| STORM_[SA]_ANONYMOUS_HTTP_READ | Storage Area anonymous read access via HTTP. Optional variable. Available values: true, false. Default value: false |
| STORM_[SA]_ACCESSPOINT | Space-separated list of paths exposed by the SRM in the SURL. Optional variable. Default value: SA |
| STORM_[SA]_ACLMODE | See STORM_ACLMODE definition. Optional variable. Default value: STORM_ACLMODE |
| STORM_[SA]_AUTH | See STORM_AUTH definition. Optional variable. Default value: STORM_AUTH |
| STORM_[SA]_DEFAULT_ACL_LIST | A list of ACL entries that specifies a set of local groups with the corresponding permissions (R, W, RW), using the following syntax: groupname1:permission1 [groupname2:permission2] […] |
| STORM_[SA]_DN_C_REGEX | Regular expression specifying the format of the C (Country) field of DNs that will use the Storage Area. Optional variable. |
| STORM_[SA]_DN_O_REGEX | Regular expression specifying the format of the O (Organization name) field of DNs that will use the Storage Area. Optional variable. |
| STORM_[SA]_DN_OU_REGEX | Regular expression specifying the format of the OU (Organizational Unit) field of DNs that will use the Storage Area. Optional variable. |
| STORM_[SA]_DN_L_REGEX | Regular expression specifying the format of the L (Locality) field of DNs that will use the Storage Area. Optional variable. |
| STORM_[SA]_DN_CN_REGEX | Regular expression specifying the format of the CN (Common Name) field of DNs that will use the Storage Area. Optional variable. |
| STORM_[SA]_FILE_SUPPORT | Enables the file protocol. Optional variable. Default value: STORM_INFO_FILE_SUPPORT |
| STORM_[SA]_GRIDFTP_SUPPORT | Enables the GridFTP protocol. Optional variable. Default value: STORM_INFO_GRIDFTP_SUPPORT |
| STORM_[SA]_RFIO_SUPPORT | Enables the RFIO protocol. Optional variable. Default value: STORM_INFO_RFIO_SUPPORT |
| STORM_[SA]_ROOT_SUPPORT | Enables the root protocol. Optional variable. Default value: STORM_INFO_ROOT_SUPPORT |
| STORM_[SA]_HTTP_SUPPORT | Enables the HTTP protocol. Optional variable. Default value: STORM_INFO_HTTP_SUPPORT |
| STORM_[SA]_HTTPS_SUPPORT | Enables the HTTPS protocol. Optional variable. Default value: STORM_INFO_HTTPS_SUPPORT |
| STORM_[SA]_FSTYPE | See STORM_FSTYPE definition. Optional variable. Available values: posixfs, gpfs. Default value: STORM_FSTYPE |
| STORM_[SA]_GRIDFTP_POOL_LIST | See STORM_GRIDFTP_POOL_LIST definition. Optional variable. Default value: STORM_GRIDFTP_POOL_LIST |
| STORM_[SA]_GRIDFTP_POOL_STRATEGY | See STORM_GRIDFTP_POOL_STRATEGY definition. Optional variable. Default value: STORM_GRIDFTP_POOL_STRATEGY |
| STORM_[SA]_ONLINE_SIZE | Total size assigned to the Storage Area, expressed in GB. Must be an integer value. Mandatory. |
| STORM_[SA]_ORGS | Comma-separated list of the supported authorization servers used by StoRM WebDAV with bearer tokens. No default value. |
| STORM_[SA]_QUOTA | Enables quota management for the Storage Area; it works only on GPFS filesystems. Optional variable. Available values: true, false. Default value: false |
| STORM_[SA]_QUOTA_DEVICE | GPFS device on which the quota is enabled. Mandatory if STORM_[SA]_QUOTA is set to true. No default value. |
| STORM_[SA]_QUOTA_USER | GPFS quota scope. Only one of STORM_[SA]_QUOTA_USER, STORM_[SA]_QUOTA_GROUP and STORM_[SA]_QUOTA_FILESET is used, with priority in this order: USER, then GROUP, then FILESET. Optional variable. No default value. |
| STORM_[SA]_QUOTA_GROUP | GPFS quota scope (see STORM_[SA]_QUOTA_USER for precedence). Optional variable. No default value. |
| STORM_[SA]_QUOTA_FILESET | GPFS quota scope (see STORM_[SA]_QUOTA_USER for precedence). Optional variable. No default value. |
| STORM_[SA]_RFIO_HOST | See STORM_RFIO_HOST definition. Optional variable. Default value: STORM_RFIO_HOST |
| STORM_[SA]_ROOT | Physical storage path for the VO. Optional variable. Default value: STORM_DEFAULT_ROOT/SA |
| STORM_[SA]_ROOT_HOST | See STORM_ROOT_HOST definition. Optional variable. Default value: STORM_ROOT_HOST |
| STORM_[SA]_SIZE_LIMIT | See STORM_SIZE_LIMIT definition. Default value: STORM_SIZE_LIMIT |
| STORM_[SA]_STORAGECLASS | See STORM_STORAGECLASS definition. Available values: T0D1, T1D0, T1D1, null. No default value. |
| STORM_[SA]_TOKEN | Storage Area token, e.g.: LHCb_RAW, INFNGRID_DISK. No default value. |
| STORM_[SA]_USED_ONLINE_SIZE | Storage space currently used in the Storage Area, expressed in bytes. Must be an integer value. Used by YAIM to populate the used-space.ini file. |
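Two of the variables above have a small syntax of their own. STORM_[SA]_DEFAULT_ACL_LIST uses group:permission entries, and the STORM_[SA]_QUOTA_USER, _GROUP and _FILESET scopes follow a fixed precedence. The Python sketch below illustrates both. It assumes that ACL entries are separated by spaces and that permissions are written in upper case. The helpers are hypothetical and do not reproduce YAIM's own validation.

```python
# Hypothetical helpers (not part of YAIM) interpreting two per-SA variables.
from typing import Dict, List, Optional, Tuple

VALID_PERMISSIONS = {"R", "W", "RW"}
QUOTA_SCOPE_PRIORITY = ("USER", "GROUP", "FILESET")  # first one set wins


def parse_default_acl_list(value: str) -> List[Tuple[str, str]]:
    """Parse "group1:perm1 group2:perm2" into (group, permission) pairs."""
    entries = []
    for item in value.split():
        group, sep, perm = item.rpartition(":")
        if not sep or not group or perm not in VALID_PERMISSIONS:
            raise ValueError(f"invalid ACL entry: {item!r}")
        entries.append((group, perm))
    return entries


def effective_quota_scope(site_info: Dict[str, str],
                          sa: str) -> Optional[Tuple[str, str]]:
    """Return (scope, value) for the highest-priority quota scope that is set."""
    for scope in QUOTA_SCOPE_PRIORITY:
        value = site_info.get(f"STORM_{sa}_QUOTA_{scope}", "").strip()
        if value:
            return scope, value
    return None


if __name__ == "__main__":
    print(parse_default_acl_list("storm:RW dteam:R"))
    print(effective_quota_scope(
        {"STORM_DTEAM_QUOTA_GROUP": "dteam", "STORM_DTEAM_QUOTA_FILESET": "dteamfs"},
        "DTEAM"))
```

The first call prints [('storm', 'RW'), ('dteam', 'R')]. The second prints ('GROUP', 'dteam'), because GROUP takes precedence over FILESET when USER is not set. The group and fileset names are only examples.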

The most important (and mandatory) parameters of storm.properties are configured by default through YAIM with a standard installation of StoRM. All the other parameters are optional and can be used for advanced tuning of the Backend. To change or set a value, or to add a new parameter, edit the storm.properties file and restart the Backend daemon.
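Editing storm.properties by hand is enough for most changes. When the change has to be scripted, a minimal Python sketch such as the one below can update a key in place. It assumes one-line key=value entries and does not cover every Java properties syntax. It is a hypothetical helper, not a StoRM tool.

The example uses storm.service.SURL.endpoint from the storm.properties parameter table, with example hostnames. Restart the Backend afterwards, as described above.

```python
# Hypothetical helper (not shipped with StoRM): set a key in the text of
# storm.properties. Simplified: it only handles one-line "key=value" entries,
# not every Java properties syntax (continuation lines, ':' separators, escapes).
import re


def set_property(text: str, key: str, value: str) -> str:
    """Return text with every key=value line for key updated, or the key appended."""
    line_re = re.compile(rf"^[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    new_line = f"{key}={value}"
    if line_re.search(text):
        # A function replacement keeps backslashes in value from being
        # interpreted as regex group references.
        return line_re.sub(lambda _match: new_line, text)
    if text and not text.endswith("\n"):
        text += "\n"
    return text + new_line + "\n"


if __name__ == "__main__":
    current = ("# SURL endpoints\n"
               "storm.service.SURL.endpoint=srm://fe.example.org:8444/srm/managerv2\n")
    print(set_property(current, "storm.service.SURL.endpoint",
                       "srm://fe.example.org:8444/srm/managerv2,"
                       "srm://192.168.100.12:8444/srm/managerv2"), end="")
```

All matching lines are rewritten rather than just the first one. With Java properties the last occurrence of a duplicated key wins, so leaving a stale duplicate would silently override the new value.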

To configure the service with YAIM, run the following command:

/opt/glite/yaim/bin/yaim -c -s SITEINFO.def -n se_storm_backend

Configure the service with Puppet

The StoRM Puppet module can be used to configure the service on the CentOS 7 platform.

The module contains the storm::backend class, which installs the storm-backend-mp metapackage and allows site administrators to configure the storm-backend-server service.

Prerequisites: a MySQL or MariaDB server with the StoRM databases must exist. The databases can be empty. If you want to use this module to install the MySQL client and server and to initialize the databases, read about the StoRM database utility class.

The Backend class installs:

- storm-backend-mp

- storm-dynamic-info-provider

Then, the Backend class configures storm-backend-server service by managing the following files:

- /etc/storm/backend-server/storm.properties

- /etc/storm/backend-server/namespace.xml

- /etc/systemd/system/storm-backend-server.service.d/storm-backend-server.conf

- /etc/systemd/system/storm-backend-server.service.d/filelimit.conf

and deploys the StoRM databases. In addition, this class configures and runs the StoRM Info Provider by managing the following file:

- /etc/storm/info-provider/storm-yaim-variables.conf

The whole list of StoRM Backend class parameters can be found here.

Example of StoRM Backend configuration:

class { 'storm::backend':
  hostname              => 'backend.test.example',
  frontend_public_host  => 'frontend.test.example',
  transfer_protocols    => ['file', 'gsiftp', 'webdav'],
  xmlrpc_security_token => 'NS4kYAZuR65XJCq',
  service_du_enabled    => true,
  srm_pool_members      => [
    {
      'hostname' => 'frontend.test.example',
    },
  ],
  gsiftp_pool_members   => [
    {
      'hostname' => 'gridftp.test.example',
    },
  ],
  webdav_pool_members   => [
    {
      'hostname' => 'webdav.test.example',
    },
  ],
  storage_areas         => [
    {
      'name'          => 'dteam-disk',
      'root_path'     => '/storage/disk',
      'access_points' => ['/disk'],
      'vos'           => ['dteam'],
      'online_size'   => 40,
    },
    {
      'name'          => 'dteam-tape',
      'root_path'     => '/storage/tape',
      'access_points' => ['/tape'],
      'vos'           => ['dteam'],
      'online_size'   => 40,
      'nearline_size' => 80,
      'fs_type'       => 'gpfs',
      'storage_class' => 'T1D0',
    },
  ],
}

Starting from Puppet module v2.0.0, the management of Storage Site Report has been improved.

Site administrators can add the script and the cron job described in the how-to by using the defined type storm::backend::storage_site_report.

For example:

storm::backend::storage_site_report { 'storage-site-report':
  report_path => '/storage/info/report.json', # the internal storage area path
  minute      => '*/20', # set cron's minute
}

StoRM database class

The StoRM database utility class installs the MariaDB server and related RPMs, and configures the MariaDB service by managing the following files:

- /etc/my.cnf.d/server.cnf;

- /etc/systemd/system/mariadb.service.d/limits.conf.

The whole list of StoRM Database class parameters can be found here.

Example of StoRM Database usage:

class { 'storm::db':
  root_password  => 'supersupersecretword',
  storm_password => 'supersecretword',
}

Logging

The Backend log files provide information on the execution of all SRM requests. All the Backend log files are placed in the /var/log/storm directory. Backend logging is based on the Logback framework, which lets you set the verbosity level depending on the use case. The supported levels, from least to most verbose, are ERROR, WARN, INFO and DEBUG.

The file

/etc/storm/backend-server/logging.xml

contains the following information:

<logger name="it.grid.storm" additivity="false">
  <level value="INFO" />
  <appender-ref ref="PROCESS" />
</logger>

The level value can be set to the desired log level. Be careful, because logging can impact system performance (up to 30% slower with DEBUG in the worst case). The suggested logging level for production endpoints is INFO. If the log level is modified, the Backend does not need to be restarted to read the new value.
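To switch levels from a script, the XML snippet above can be edited programmatically. The Python sketch below assumes that logging.xml is a Logback configuration whose logger elements appear under the root element. The helper name is hypothetical. ElementTree discards XML comments when parsing, so review the result before writing it back over the real file.

```python
# Hypothetical helper (not shipped with StoRM): change the level of one
# logger in a Logback configuration such as logging.xml.
import xml.etree.ElementTree as ET

LOGBACK_LEVELS = {"ALL", "TRACE", "DEBUG", "INFO", "WARN", "ERROR", "OFF"}


def set_logger_level(xml_text: str, logger_name: str, level: str) -> str:
    """Return xml_text with the <level value=...> of the named logger set to level."""
    if level.upper() not in LOGBACK_LEVELS:
        raise ValueError(f"unknown Logback level: {level}")
    root = ET.fromstring(xml_text)
    for logger in root.iter("logger"):
        if logger.get("name") == logger_name:
            level_el = logger.find("level")
            if level_el is None:
                level_el = ET.SubElement(logger, "level")
            level_el.set("value", level.upper())
            # ElementTree drops XML comments when parsing, so they are lost here.
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"logger {logger_name!r} not found")


if __name__ == "__main__":
    config = ('<configuration><logger name="it.grid.storm" additivity="false">'
              '<level value="INFO" /><appender-ref ref="PROCESS" />'
              '</logger></configuration>')
    print(set_logger_level(config, "it.grid.storm", "DEBUG"))
```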

The StoRM Backend log files are the following:

- storm-backend.log, the main log file, where each single request and all errors are logged;

- heartbeat.log, an aggregated log that shows the number of synchronous and asynchronous requests that occurred since startup and in the last minute;

- storm-backend-metrics.log, a finer grained monitoring of incoming synchronous requests, contains metrics for individual types of synchronous requests.

The storm-backend.log file

The main Backend service log file is:

- storm-backend.log. All the information about the SRM execution process, errors and warnings is logged here, depending on the log level. At startup, the BE logs all the storm.properties values here, which is useful to check the values effectively used by the system. After that, the BE logs the result of the namespace initialization, reporting errors or misconfigurations. At INFO level, for each SRM operation the BE logs at least who requested the operation (DN and FQANs), on which files (SURLs), and the operation result. At DEBUG level, much more information is printed about the status of many StoRM internal components, depending on the SRM request type. The DEBUG level should be used with care, only for troubleshooting. If the ERROR level is used, only events due to error conditions are logged.

The heartbeat.log file

StoRM provides a bookkeeping framework that processes information on the SRM requests handled by the system and produces user-friendly aggregated data, which gives a quick view of system health.

- heartbeat.log. This file contains information on the SRM requests processed by the system since its startup, adding new information at each beat. The beat interval is configurable; the default is 60 seconds. At each beat, the heartbeat component logs an entry.

A heartbeat.log entry example:

[#.....71 lifetime=1:10.01]

Heap Free:59123488 SYNCH [500] ASynch [PTG:2450 PTP:3422]

Last:( [#PTG=10 OK=10 M.Dur.=150] [#PTP=5 OK=5 M.Dur.=300] )

| Field | Meaning |
|---|---|
| #.....71 | Log entry number |
| lifetime=1:10.01 | Lifetime since the last startup, hh:mm:ss |
| Heap Free:59123488 | Free heap size of the BE process, in bytes |
| SYNCH [500] | Number of synchronous SRM requests executed in the last beat |
| ASynch [PTG:2450 PTP:3422] | Number of srmPrepareToGet and srmPrepareToPut requests executed since startup |
| Last:( [#PTG=10 OK=10 M.Dur.=150] | Number of srmPrepareToGet requests executed in the last beat, with the number of requests that completed successfully (OK=10) and the mean duration in milliseconds (M.Dur.=150) |
| [#PTP=5 OK=5 M.Dur.=300] | Number of srmPrepareToPut requests executed in the last beat, with the number of requests that completed successfully and the mean duration in milliseconds |

This log information is very useful for a global view of the overall system status. Tailing this file is the first thing to do if you want to check the health of your StoRM installation. From here you can see whether the system is receiving SRM requests, whether it is overloaded by SRM requests, whether PtG and PtP requests are running without problems, or whether the interaction with the filesystem is exceptionally slow (M.Dur. much higher than usual).
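For scripted health checks, a heartbeat entry can be broken into its counters. The Python sketch below relies only on the example entry and field table above. The exact format may differ between StoRM versions, so the regular expressions are assumptions, and parse_heartbeat is a hypothetical helper.

```python
# Hypothetical parser (not shipped with StoRM) for a heartbeat.log entry,
# based only on the example entry and field table above.
import re
from typing import Dict

LAST_BEAT_RE = re.compile(r"\[#(PTG|PTP)=(\d+) OK=(\d+) M\.Dur\.=(\d+(?:\.\d+)?)\]")


def _first(pattern: str, entry: str):
    """Return the groups of the first match of pattern, or fail loudly."""
    match = re.search(pattern, entry)
    if match is None:
        raise ValueError(f"{pattern!r} not found in heartbeat entry")
    return match.groups()


def parse_heartbeat(entry: str) -> Dict[str, object]:
    """Extract the counters of one heartbeat entry (it may span several lines)."""
    (number,) = _first(r"\[#\.*(\d+)", entry)
    (heap,) = _first(r"Heap Free:(\d+)", entry)
    (synch,) = _first(r"SYNCH \[(\d+)\]", entry)
    ptg_total, ptp_total = _first(r"ASynch \[PTG:(\d+) PTP:(\d+)\]", entry)
    last_beat = {
        kind: {"count": int(n), "ok": int(ok), "mean_ms": float(dur)}
        for kind, n, ok, dur in LAST_BEAT_RE.findall(entry)
    }
    return {
        "entry": int(number),
        "heap_free_bytes": int(heap),
        "synch_last_beat": int(synch),
        "ptg_since_startup": int(ptg_total),
        "ptp_since_startup": int(ptp_total),
        "last_beat": last_beat,
    }


if __name__ == "__main__":
    sample = ("[#.....71 lifetime=1:10.01] Heap Free:59123488 SYNCH [500] "
              "ASynch [PTG:2450 PTP:3422] "
              "Last:( [#PTG=10 OK=10 M.Dur.=150] [#PTP=5 OK=5 M.Dur.=300] )")
    beat = parse_heartbeat(sample)
    for kind, stats in beat["last_beat"].items():
        not_ok = stats["count"] - stats["ok"]
        print(f"{kind}: {stats['count']} requests, {not_ok} not OK, "
              f"mean {stats['mean_ms']:.0f} ms")
```

On the sample entry it prints "PTG: 10 requests, 0 not OK, mean 150 ms" followed by "PTP: 5 requests, 0 not OK, mean 300 ms". A monitoring script could compare mean_ms against the usual values for the site.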

The storm-backend-metrics.log file

A finer grained monitoring of incoming synchronous requests is provided by this log file. It contains metrics for individual types of synchronous requests.

A storm-backend-metrics.log entry example:

16:57:03.109 - synch.ls [(m1_count=286, count=21136) (max=123.98375399999999, min=4.299131, mean=9.130859862802883, p95=20.736006, p99=48.147704999999995) (m1_rate=4.469984951030006, mean_rate=0.07548032009470132)] duration_units=milliseconds, rate_units=events/second

| Field | Meaning |
|---|---|
| synch.ls | Type of operation |
| m1_count=286 | Number of operations in the last minute |
| count=21136 | Number of operations since the last startup |
| max=123.98375399999999 | Maximum duration of the last batch |
| min=4.299131 | Minimum duration of the last batch |
| mean=9.130859862802883 | Mean duration of the last batch |
| p95=20.736006 | 95% of the operations in the last batch lasted less than 20.73 ms |
| p99=48.147704999999995 | 99% of the operations in the last batch lasted less than 48.14 ms |
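The same approach works for storm-backend-metrics.log. The Python sketch below extracts the operation name and every numeric key=value field from one line, assuming the layout of the example entry above. parse_metrics_line and the 40 ms threshold are illustrative and are not part of StoRM.

```python
# Hypothetical parser (not shipped with StoRM) for storm-backend-metrics.log
# lines shaped like the example above.
import re
from typing import Dict, Tuple

OPERATION_RE = re.compile(r" - (\S+) \[")
NUMERIC_FIELD_RE = re.compile(r"(\w+)=(\d+(?:\.\d+)?)")


def parse_metrics_line(line: str) -> Tuple[str, Dict[str, float]]:
    """Return (operation, {field: value}) for the numeric fields of one line."""
    match = OPERATION_RE.search(line)
    if match is None:
        raise ValueError("not a storm-backend-metrics.log entry")
    # Non-numeric fields such as duration_units=milliseconds are skipped.
    fields = {key: float(value) for key, value in NUMERIC_FIELD_RE.findall(line)}
    return match.group(1), fields


if __name__ == "__main__":
    line = ("16:57:03.109 - synch.ls [(m1_count=286, count=21136) "
            "(max=123.98375399999999, min=4.299131, mean=9.130859862802883, "
            "p95=20.736006, p99=48.147704999999995) "
            "(m1_rate=4.469984951030006, mean_rate=0.07548032009470132)] "
            "duration_units=milliseconds, rate_units=events/second")
    operation, fields = parse_metrics_line(line)
    # Flag operations whose 99th percentile exceeds an arbitrary 40 ms threshold.
    if fields["p99"] > 40:
        print(f"{operation}: p99={fields['p99']:.2f} ms")
```

For the example entry it prints "synch.ls: p99=48.15 ms".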

Here is the list of current logged operations:

| Operation | Description |
|---|---|
| synch | Summary of synchronous operations |
| synch.af | Synchronous srmAbortFiles operations |
| synch.ar | Synchronous srmAbortRequest operations |
| synch.efl | Synchronous srmExtendFileLifeTime operations |
| synch.gsm | Synchronous srmGetSpaceMetaData operations |
| synch.gst | Synchronous srmGetSpaceTokens operations |
| synch.ls | Synchronous srmLs operations |
| synch.mkdir | Synchronous srmMkdir operations |
| synch.mv | Synchronous srmMv operations |
| synch.pd | Synchronous srmPutDone operations |
| synch.ping | Synchronous srmPing operations |
| synch.rf | Synchronous srmReleaseFiles operations |
| synch.rm | Synchronous srmRm operations |
| synch.rmDir | Synchronous srmRmdir operations |
| fs.aclOp | ACL set/unset operations on the filesystem |
| fs.fileAttributeOp | File attribute set/unset operations on the filesystem |
| ea | Extended attribute operations |